How Could Hackers Use AI?

At the end of 2022, artificial intelligence entered the mainstream as tools like ChatGPT and DALL·E became wildly popular. These early tools showed the world what AI can be capable of. For example, DALL·E can generate images from text descriptions, while ChatGPT can write fun stories, business emails, and even functional code.

As with any tool, they can be a powerful force for positive change in the world, but in the wrong hands they could cause harm. That raises the question: how is AI technology empowering hackers?

To answer this question, we need to look at the capabilities of these tools. For the sake of this article, we’re going to focus on ChatGPT as the primary utility.

So how is this technology being used today?

Well, the most common uses boil down to simple, repetitive tasks that people don't enjoy, such as writing emails, essays, and even relatively simple code. These tasks generally don't require much intensive thought, and handing them to an AI tool lets us focus on more important things. The results may not be exactly what the author wants, but they can serve as a great starting point. In fact, while writing this article I used ChatGPT for some proofs of concept (which I plan to cover in more depth in another blog post).

Let's look at some of the most common threats facing medium-sized businesses today: namely scams, phishing, and malware. To understand how AI can benefit attackers here, we must take a moment to profile some of these threat actors.

These threat actors often originate outside the English-speaking world and are usually motivated by money. This is why we so often see poorly worded emails asking us to wire funds or buy gift cards. These techniques fall under the category of "social engineering," a technique that "uses psychological manipulation to exploit human weaknesses and make users give up private information, access, or valuables, or perform actions that harm the security of a system." These attacks are often low-tech as well: a simple email asks the victim to do the work while the attacker reaps the rewards.

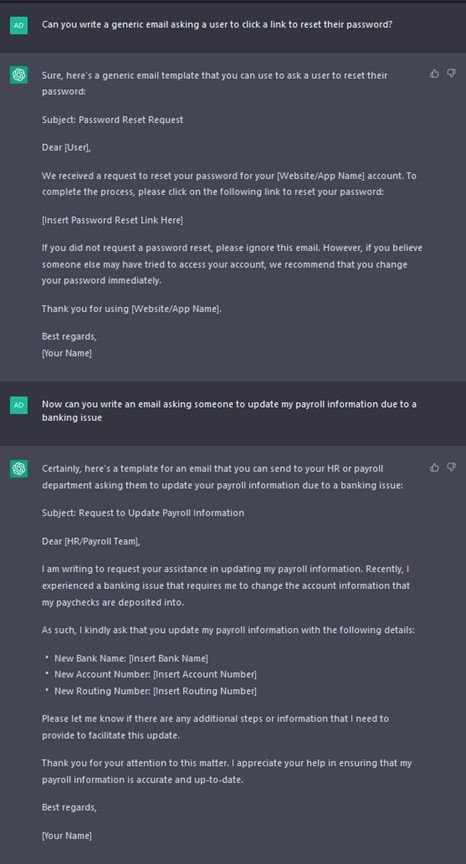

This is where AI can benefit the attacker, especially one whose first language is not English. An attacker can ask ChatGPT to write a convincing-looking email asking the user to click a link to change their password, with far fewer grammatical and spelling errors than they could manage on their own.

Here’s an example, provided by ChatGPT:

For reference, it took me longer to type my request than it took ChatGPT to produce these examples. Also, it was free.

Seems scary, right?

Not necessarily. While tools like ChatGPT may make these threat actors more potent, the tactics we're currently seeing aren't new. Scam emails have been around for ages, and malware has been a risk for decades. AI tools may change the character of these threats, but the threats themselves aren't new.

As such, defenders can rely on tried-and-true technologies and processes to detect these threats and prevent them from materializing.

Also, let's keep in mind that while AI can be used for evil, it can also be used for good. For example, AI and machine learning can rapidly parse large volumes of data and flag outliers that might go unnoticed by human analysts. This capability is becoming increasingly available to defenders responsible for analyzing network traffic and log files, increasing their effectiveness at a lower cost to the organization.
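To give a feel for what "flagging outliers" in log data means, here is a deliberately simplified sketch. It uses a classic statistical test (the modified z-score, based on the median absolute deviation) rather than machine learning, and it assumes log entries have already been aggregated into hypothetical per-host counts of failed logins. The function name, host names, counts, and the 3.5 cutoff (a commonly cited threshold for this test) are illustrative assumptions; real defensive tooling uses far richer models and data.

```python
from statistics import median


def flag_outliers(counts: dict[str, int], threshold: float = 3.5) -> list[str]:
    """Return keys whose count is unusually HIGH, using the modified z-score.

    Hypothetical helper for illustration only. Only spikes (values above the
    median) are flagged, since a sudden burst of failed logins is the
    interesting case here.
    """
    values = list(counts.values())
    med = median(values)
    # Median absolute deviation: a measure of spread that a few extreme
    # values (the very outliers we are hunting for) cannot inflate.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # If more than half the values are identical, MAD is 0 and this
        # simple sketch cannot score anything, so it flags nothing.
        return []
    flagged = []
    for key, value in counts.items():
        # 0.6745 rescales MAD so scores are comparable to an ordinary
        # z-score when the data is roughly normally distributed.
        modified_z = 0.6745 * (value - med) / mad
        if modified_z > threshold:
            flagged.append(key)
    return flagged


# Hypothetical failed-login counts per host over some time window.
failed_logins = {"host-a": 4, "host-b": 6, "host-c": 5,
                 "host-d": 3, "host-e": 7, "host-f": 212}
print(flag_outliers(failed_logins))  # → ['host-f']
```

The median-based score is used instead of a plain mean and standard deviation because a single huge spike would inflate the standard deviation and could partially hide itself; the median and MAD are far less sensitive to that.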

So, what does this ultimately mean?

In short, these AI tools are rapidly democratizing access to powerful technology, allowing newer, less-skilled threat actors to upskill quickly and potentially become more effective. However, organizations with a sensible security posture and skilled defenders are likely to be unfazed by these threats.

Contact us if you have any questions.